Neuromarketing Applications of Neuroprosthetic Devices: An Assessment of Neural Implants’ Capacities for Gathering Data and Influencing Behavior

In Business Models for Strategic Innovation: Cross-Functional Perspectives, edited by S.M. Riad Shams, Demetris Vrontis, Yaakov Weber, and Evangelos Tsoukatos, pp. 11-24 • London: Routledge, 2018

ABSTRACT: Neuromarketing utilizes innovative technologies to accomplish two key tasks: 1) gathering data about the ways in which human beings’ cognitive processes can be influenced by particular stimuli; and 2) creating and delivering stimuli to influence the behavior of potential consumers. In this text, we argue that, rather than relying on specialized systems such as EEG and fMRI equipment (for data gathering) and web-based microtargeting platforms (for influencing behavior), neuromarketing practitioners will increasingly be able to perform both tasks by accessing and exploiting neuroprosthetic devices already possessed by members of society.

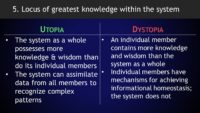

We first present an overview of neuromarketing and neuroprosthetic devices. We then develop a two-dimensional conceptual framework for identifying the technological and biocybernetic capacities of different types of neuroprosthetic devices for performing neuromarketing-related functions. One axis of the framework delineates the main functional types of neural implants (sensory, motor, and cognitive); the other describes the key neuromarketing activities of gathering data on consumers’ cognitive activity and influencing their behavior. Finally, we use this framework to identify potential neuromarketing applications for a diverse range of existing and anticipated neuroprosthetic technologies.

We hope that this analysis of the capacities of neuroprosthetic devices for neuromarketing-related roles can: 1) lay a foundation for subsequent analyses of whether such potential applications are desirable or inappropriate from ethical, legal, and operational perspectives; and 2) help information security professionals develop effective mechanisms for protecting neuroprosthetic devices against inappropriate or undesired neuromarketing techniques while safeguarding legitimate neuromarketing activities.